Creating the 10x Physician – Supporting Clinical Practice with Generative AI – Part 2

Having covered the goals, limitations and regulations of Clinical Decision Support in Part 1, Part 2 looks at the tools that will drive 10x medicine.

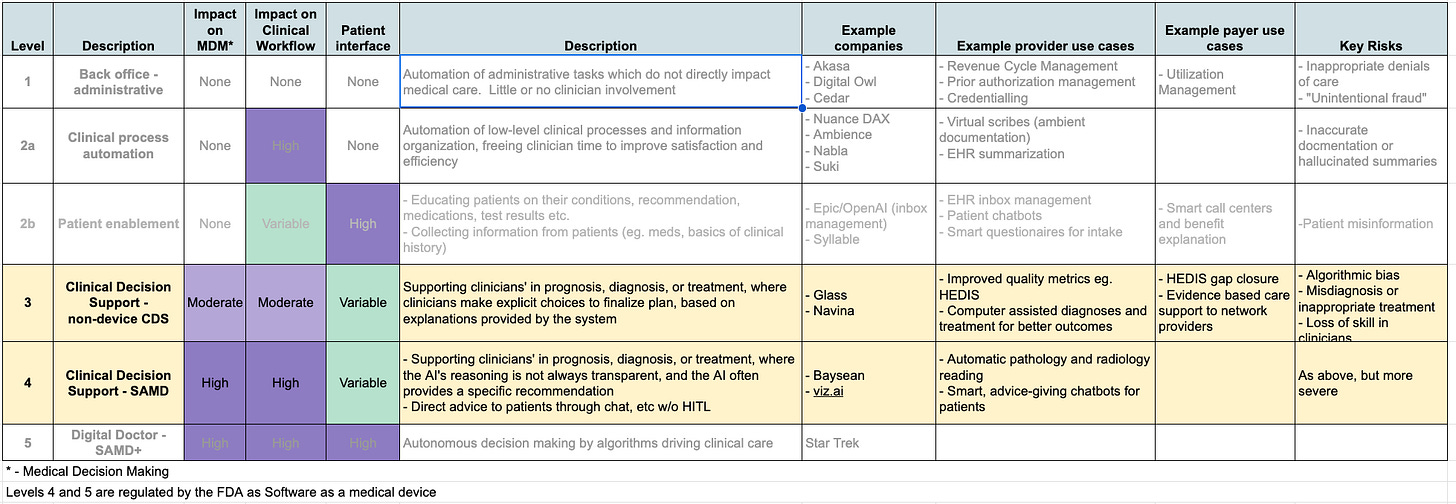

Clinical Decision Support tools (Levels 3 and 4 of the five levels of healthcare automation) aim to make care better by improving the clinical decisions that directly affect the Triple Aim: Better Outcomes, Better Experience, Lower Cost.

The preceding article on Clinical Decision Support broke physician activities down into four major categories (Diagnosis, Assessment, Treatment and Counselling) and examined some of the boundary constraints on GenAI’s impact. Here we cover how Generative AI can affect each of these areas.

Information Access

From the invention of writing until the modern era, religion and medicine dominated the use of the written word. From Egyptian papyrus to Galen to Avicenna to Harrison, physicians and medical scientists have recorded their learnings and experiences to pass down to the next generation of practitioners.

The explosion of medical science in the 20th and 21st centuries has led to incredible advancements in human health (vaccines, antibiotics, modern anesthesia, chemotherapy, etc.). This speed of advancement has brought new challenges for clinicians, as medical knowledge is being created much faster than any practitioner can absorb. In 1950, the doubling time of medical knowledge was 50 years; in 2021, it was 3.5 years. Clinicians have always relied on references, but the nature of those references, and the way clinicians access them, has shifted. In the initial information revolution, physical textbooks and indexed bound journals were supplanted by digital resources and search strategies, such as PubMed for finding journal articles and curated references such as UpToDate and Medscape for point-of-care guidance.
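To put those doubling times in perspective, take the quoted figures at face value and assume simple exponential growth: with a doubling time of $T_d$ years, the body of knowledge $K$ grows by a factor of $2^{t/T_d}$ over $t$ years. Over a ten-year stretch of a clinician's career:

$$
\frac{K(t)}{K(0)} = 2^{t/T_d}, \qquad 2^{10/50} \approx 1.15, \qquad 2^{10/3.5} \approx 7.2
$$

At the 1950 rate, a decade of practice added roughly 15% to what a clinician needed to know; at the 3.5-year rate, the same decade multiplies it about sevenfold.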

While they are a vast improvement over searching through a medical library’s stacks while your patient is crashing, these v1.0 digital references are built on a search paradigm that is quickly going out of style. Just as ChatGPT is rapidly supplanting Google as the fastest way to answer questions, clinical reference is ready for a GenAI paradigm shift. Searching UpToDate, PubMed, or any other medical reference entails formulating a query, scrolling through a list of articles or papers, and then reading whichever one seems most germane to work out what is actually relevant to the patient at hand. It is a slow and laborious process, but a reliable one, in which any significant claim can be traced, reference by reference, back to the original literature.

Generative AI, through Retrieval Augmented Generation (RAG) techniques, promises more relevant results in a fraction of the time; it can be the “second brain” doctors have been waiting for. Doing this safely, however, will require a degree of explainability and referencing to guard against hallucinations that none of the current Large Language Models supports. PalmyraMed’s “claim detection” functionality is a promising step in this direction, and UpToDate has launched an AI Lab where it is very cautiously experimenting with LLM-based search. This is a solvable but currently unsolved problem, and whoever solves it first has the opportunity to own the hyper-profitable $10B medical publishing market. Because the goal of GenAI-based information access is to improve the quality of care, it belongs in the Clinical Decision Support class; but because it is essentially a better version of a library, and not patient-specific in a way that would drive SAMD classification, it sits firmly in Level 3.
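To make the referencing requirement concrete, here is a minimal sketch of the retrieval and citation-checking steps that would sit around an LLM in a RAG pipeline. It assumes a toy in-memory corpus and uses plain bag-of-words cosine similarity in place of a real embedding model and vector store. The model call itself is deliberately left out: `build_prompt` only shows how retrieved passages and their source IDs would be handed to a model, and `uncited_sentences` flags any sentence in the model's answer that does not cite a retrieved source. All names are hypothetical, and the sketch is not modeled on any vendor's product.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Passage:
    source_id: str  # hypothetical citation key pointing back to the original literature
    text: str


def tokenize(text: str) -> list[str]:
    # Lowercased alphanumeric tokens; a real system would use an embedding model instead.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two bag-of-words vectors (missing terms count as 0).
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, corpus: list[Passage], k: int = 2) -> list[Passage]:
    # Rank passages by similarity to the query; keep the top k that share any terms.
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(p.text))), p) for p in corpus]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [p for score, p in scored[:k] if score > 0]


def build_prompt(query: str, hits: list[Passage]) -> str:
    # Give the model only the retrieved passages, each tagged with its source id.
    sources = "\n".join(f"[{p.source_id}] {p.text}" for p in hits)
    return (
        "Answer using ONLY the sources below. End every sentence with the [source id] "
        "it relies on, placed before the final punctuation. If the sources do not "
        "answer the question, say so.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {query}"
    )


def uncited_sentences(answer: str, allowed_ids: set[str]) -> list[str]:
    # Flag sentences that cite none of the retrieved sources, so a clinician
    # (or a stricter pipeline) can treat them as possible hallucinations.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", answer) if s.strip()]
    return [s for s in sentences if not set(re.findall(r"\[([^\]]+)\]", s)) & allowed_ids]
```

In use, you would call `retrieve` on the clinician's question, send `build_prompt(question, hits)` to whichever model you use, and run `uncited_sentences(answer, {p.source_id for p in hits})` on the reply before showing it. Keeping the citation check outside the model is the design point: traceability back to the literature is enforced by code rather than trusted to the LLM.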

Diagnosis & Assessment

Diagnosis and assessment are closely linked, with diagnosis being the assignment of specific classifications to a patient and their disease processes, and assessment the grading of severity, complexity and risk associated with a patient’s combined diagnoses.

At first glance, diagnosis seems like an obvious application for AI, and there are already impressive anecdotes of ChatGPT figuring out problems that had stumped teams of doctors. With near-infinite knowledge of disease patterns and intense computational power, one might think computers could be the ultimate diagnostician. Startups like glass.ai are building models that generate differential diagnoses to help doctors figure out what could be the root cause of a patient’s problems. But while these tools may be useful in managing rare and complex cases, for language-based models this is a higher-risk, lower-reward use case. Most diagnostic “work” is either not hard for doctors (so they don’t need the help) or quite complex, requiring integration of language-based, physical exam, imaging and laboratory information for which the training datasets don’t currently exist (and would be extremely expensive and time-consuming to generate). The dismal failure of Epic’s Sepsis Algorithm, despite a substantial dedication of resources and effort, has tempered expectations for computer-led diagnosis, at least in the near term. As of October 2023, “no device has been authorized [by the FDA] that uses generative AI or artificial general intelligence (AGI) or is powered by large language models.”

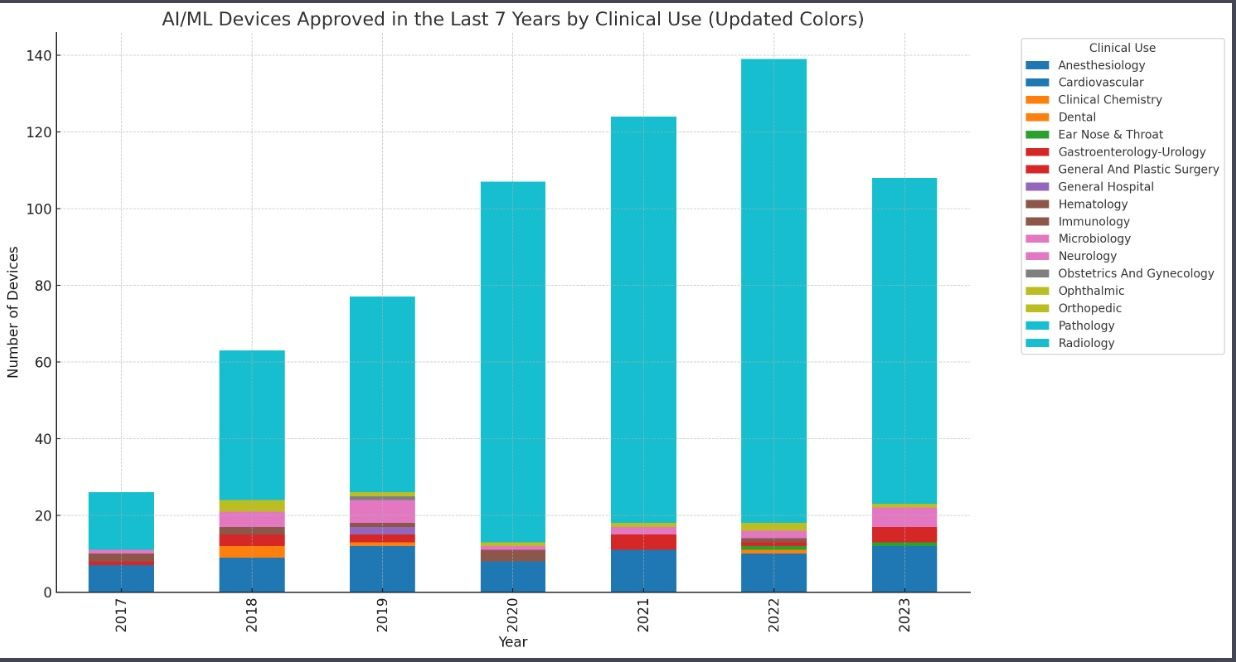

The substantial exception is where diagnosis and assessment are primarily image-based. Radiology, pathology and dermatology have been experimenting in this area for years; the vast majority of the 500+ AI-based algorithms that have received FDA authorization as Software as a Medical Device (SAMD) are radiology-based. Companies like Viz.ai and Aidoc can deliver a preliminary interpretation of imaging studies (often vascular CT scans) in seconds, with high accuracy and consistency. A human radiologist still confirms the findings, but these solutions make sure critical results don’t get delayed waiting for a radiologist to read them, and they can also automate the workflow of assembling the multidisciplinary team required to, for example, treat a Large Vessel Occlusion stroke or Intracranial Hemorrhage. Similar systems exist or are in development for reading echocardiograms and pathology images. Imaging-based systems all require FDA authorization as SAMD and are thus all Level 4 solutions, and we can expect more and more of them to receive FDA authorization over the coming years.
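The "don't let critical results wait" idea above can be sketched as a priority queue over the reading worklist. This is a minimal illustration under stated assumptions: a hypothetical worklist in which any study an imaging model flags as a suspected critical finding (for example, a suspected large vessel occlusion) is read before routine studies, ties are broken first-come, first-served, and an optional callback stands in for paging the care team. The imaging model itself is out of scope; `ai_flagged` simply represents its output, and nothing here reflects how Viz.ai or Aidoc actually build their products.

```python
import heapq
import itertools
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass(order=True)
class WorklistItem:
    priority: int                         # 0 = AI-flagged suspected critical finding, 1 = routine
    arrival: int                          # arrival order, breaks ties first-come, first-served
    study_id: str = field(compare=False)  # excluded from ordering


class TriageWorklist:
    """Hypothetical reading worklist where AI-flagged studies jump the queue."""

    def __init__(self, on_critical: Optional[Callable[[str], None]] = None) -> None:
        self._heap: list[WorklistItem] = []
        self._arrivals = itertools.count()
        self._on_critical = on_critical  # e.g. a function that pages the stroke team

    def add(self, study_id: str, ai_flagged: bool) -> None:
        item = WorklistItem(0 if ai_flagged else 1, next(self._arrivals), study_id)
        heapq.heappush(self._heap, item)
        if ai_flagged and self._on_critical is not None:
            self._on_critical(study_id)  # notify immediately; a radiologist still confirms

    def next_study(self) -> Optional[str]:
        # Highest-priority, earliest-arriving study, or None when the list is empty.
        return heapq.heappop(self._heap).study_id if self._heap else None
```

For example, adding a routine study, then a flagged one, then another routine one yields the flagged study first and the routine studies in arrival order, with the callback fired once for the flagged study.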

Treatment

Patient outcomes are driven by treatment decisions. Once a patient has been diagnosed and assessed, the clinician’s job is to do things that change the course of their disease, improving their length and quality of life. This is the area where AI-driven Clinical Decision Support has the greatest potential to change lives. Not every treatment decision needs AI decision support, but there are a few specific areas where GenAI based systems can have an outsized impact.

AI systems can stay up to date on the medical literature and manage many more data points about a patient than any human physician, making them ideal decision support tools for complex decisions in fields where the science moves fast, like oncology. It is difficult for even sub-specialist oncologists to keep up with the latest developments in chemotherapy and immunotherapy, and companies like Flatiron and Navya offer various tools to help oncologists deliver care in the one specialty where precision medicine is currently a reality. Other fields, like cardiovascular and metabolic diseases, aspire to connect genotypes to phenotypes in a way that can actually drive better care, and when they do, cardiologists and primary care physicians will need AI-driven decision support to keep up with the complexity of treatment choices.

Generative AI is in many ways a disruptive innovation: when applied to a service, it doesn’t advance the “state of the art,” but rather makes almost-as-good performance dramatically cheaper, allowing it to serve new markets. Today an expert physician, given adequate time, will make a better clinical decision than any AI system currently in existence. However, most treatment decisions are not made by unhurried expert physicians, and performance on care metrics where the right thing to do is obvious is sadly lacking. For example:

Around 30% of eligible Americans don’t get recommended colon cancer screenings, which have been proven to save lives

The problem is not that clinicians don’t know the guidelines, but that doing all the work a good primary care physician should do for their patients would keep them busy 26 hours per day! Some of this burden is shared with ancillary staff, but much of it goes undone, to the detriment of our patients.

Thoughtfully applied GenAI can be transformative here. Since the marginal cost of an LLM’s attention to detail is infinitesimal, we can train a system on the best clinicians’ practice patterns and a constantly updated corpus of literature and guidelines, and then have it continuously review the medical record. Such systems will drastically improve the quality of care while decreasing clinician burden. The initial versions will need a high degree of explainability and human clinicians firmly in the loop to function as non-device CDS, but later versions will develop the evidence (and regulatory approval) needed to make stronger recommendations.
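The colon cancer screening example above shows the kind of "obvious but undone" work such a chart-reviewing system would surface. As a minimal sketch, assuming a hypothetical record structure and a deliberately simplified screening rule (ages 45 to 75; a colonoscopy within 10 years or a FIT test within 1 year counts as current), the code below lists patients with an open screening gap. The rule parameters are illustrative placeholders, not clinical guidance. One could imagine the GenAI component extracting the `last_done` dates from unstructured notes and drafting outreach, but that split of responsibilities is an assumption, not something described above; either way, the flags go to a clinician for review.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class PatientRecord:
    patient_id: str
    birth_date: date
    # Most recent completion date per test type (hypothetical keys such as "colonoscopy").
    last_done: dict[str, date] = field(default_factory=dict)


# Illustrative, simplified rule parameters (assumptions, not clinical guidance):
# eligible age range, and how many years each qualifying test stays "current".
SCREENING_AGES = (45, 75)
QUALIFYING_TESTS = {"colonoscopy": 10, "fit": 1}


def age_on(birth: date, today: date) -> int:
    # Whole years of age, minus one if this year's birthday hasn't happened yet.
    return today.year - birth.year - ((today.month, today.day) < (birth.month, birth.day))


def years_since(past: date, today: date) -> float:
    return (today - past).days / 365.25


def has_screening_gap(patient: PatientRecord, today: date) -> bool:
    low, high = SCREENING_AGES
    if not low <= age_on(patient.birth_date, today) <= high:
        return False  # outside the eligible age range
    for test, valid_years in QUALIFYING_TESTS.items():
        done = patient.last_done.get(test)
        if done is not None and years_since(done, today) < valid_years:
            return False  # a qualifying test is still current
    return True


def find_gaps(patients: list[PatientRecord], today: date) -> list[str]:
    # Patients to surface for clinician review; nothing is acted on automatically.
    return [p.patient_id for p in patients if has_screening_gap(p, today)]
```

Keeping the eligibility logic deterministic and inspectable, rather than asking a model to decide who is overdue, is one way to get the explainability the non-device CDS framing calls for.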

Counselling

While licensed clinicians take most of the responsibility for diagnosis, assessment, and treatment decisions, in the end it’s the patient and their family who have to live with the disease. Among the most human aspects of the art of medicine are educating patients about their diseases and what to expect, and guiding them in how to cope and manage. I can’t see a time when anyone will want their cancer diagnosis or news of a loved one’s death delivered by an algorithm, but the machines have made remarkable strides in developing humanistic communication skills.

A recent study found that ChatGPT’s answers to patients’ medical questions were rated as more empathetic than doctors’ answers, and some emergency physicians are already using ChatGPT to explain complex medical concepts to family members in simple, empathetic language. Unlike actual doctors, an AI system has infinite time and patience, and never has to rush off to see another patient who is waiting.

Summary

Generative AI has the potential to help doctors make better decisions and communicate better, driving better health outcomes and happier patients, the things we should care about most. The lowest-hanging fruit in this area is:

Helping clinicians find the information they need to make decisions (clinical reference/search)

Supporting high-complexity but low-acuity decision making for clinical questions that are important but often neglected (such as polypharmacy management)

Counselling patients on the nature of their diseases and how to cope with them, as an adjunct to physician/provider communication

Because payers, providers and pharma all feel under substantial financial pressure, they are prioritizing solutions that can drive high short-term ROI. As a result, we can expect CDS solutions that directly save clinicians time and improve productivity (like Information Access), or that drive utilization of therapies these players care a lot about (like surgery and specialty pharmaceuticals), to attract the most investment and attention in the current wave.

So far we have covered the four levels of Healthcare AI Automation that exist in the modern world; Level 5 is mostly science fiction at this point and can wait for another day. The next set of posts will cover the practical “Killer Apps” and how to deploy them for the key players in the healthcare system: Providers, Payers, Pharma and Patients. Stay tuned!