Creating the 10x Physician – Supporting Clinical Practice with Generative AI - Part 1

Levels 3 and 4 of Healthcare AI Automation will make healthcare better, as well as faster and cheaper.

The first 2 of the 5 levels of healthcare automation, Administrative and Clinical Process for Providers and Patients, are all about saving time and money by making processes more efficient. The Clinical Decision Support levels (3 and 4) also make care better – improving clinical decisions that directly impact the Triple Aim – Better Outcomes, Better Experience, Lower Cost.

Background

Nearly all of what a physician or other healthcare professional does can be divided into 4 key categories:

1. Diagnosis – classifying patients into discrete categories, as “having or not having” a condition – essentially those coded by the WHO’s ICD categories

2. Assessment – More holistic and subjective than diagnosis, assessment provides the color and context to a patient’s condition

3. Treatment – deciding upon and implementing therapies to achieve a desired outcome – usually some combination of curing a disease, alleviating symptoms, and decreasing the risk of bad things happening later

4. Counselling – helping patients understand their condition and make decisions around their goals of care and treatments

The 10x Physician

Generative AI can augment the performance of clinicians in every one of these categories. While we are decades away from machines replacing trained human clinicians, we can start talking about radical improvements in what human physicians can do. The technology industry has long been obsessed with the “10x Engineer” - someone whose training and toolkit give them almost mythical powers of productivity. Generative AI has the potential to help every doctor become a 10x Physician, by being able to:

Diagnose conditions rapidly and accurately by instantly referencing the complete compendium of medical knowledge against the signs and symptoms of the patient in front of them.

Immediately view and comprehend comprehensive longitudinal multi-modal data on a patient, to assess needs and risks with incredible granularity.

Make treatment decisions that are perfectly matched to their patients’ clinical situation and personal preferences - delivering precision medicine at scale.

Ensure that their patients have a complete understanding of their medical situation and care, and are involved in treatment decisions as much as they desire.

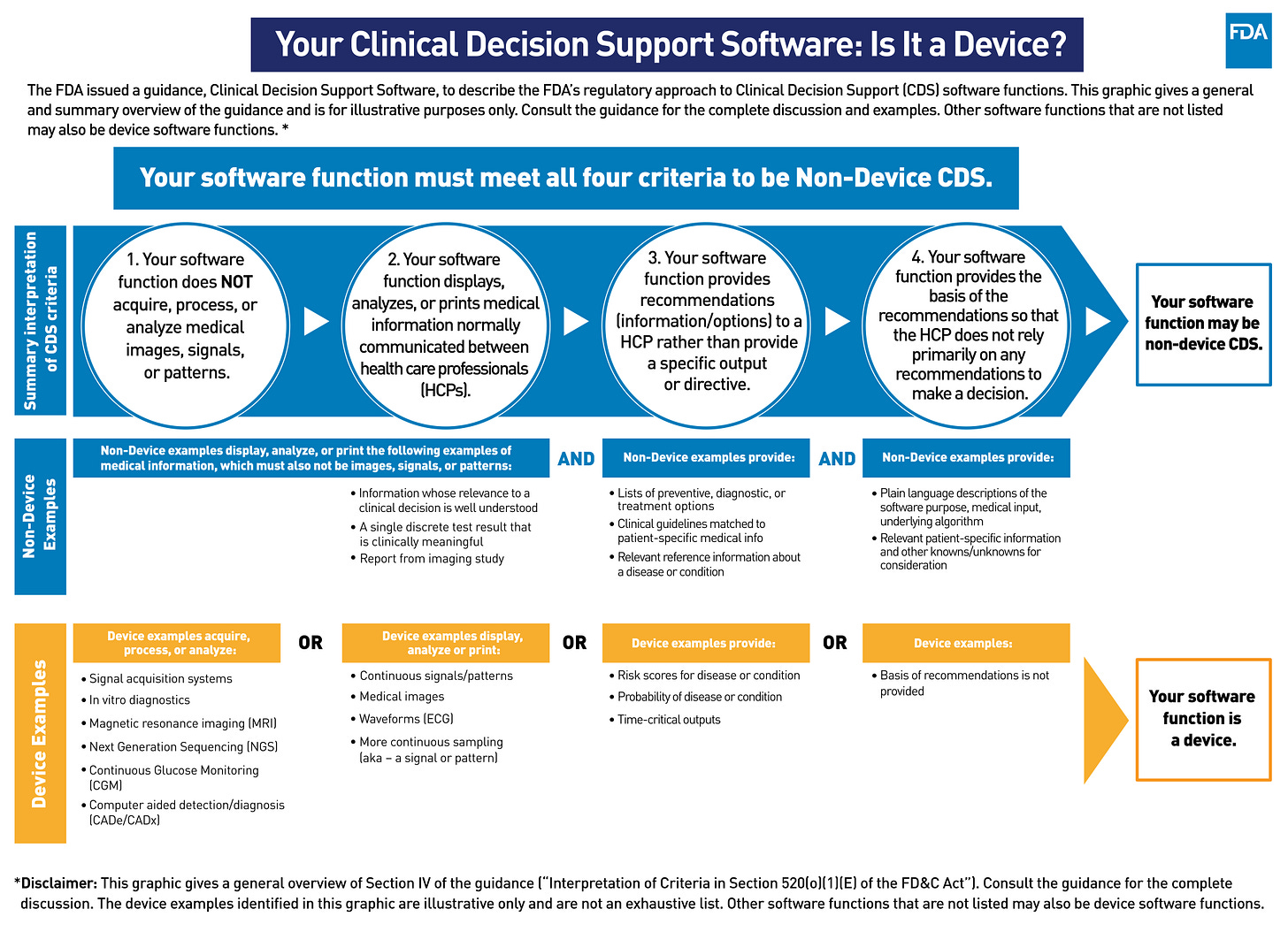

Level 3 vs Level 4 - Complexity and Regulatory Oversight

With great power comes great responsibility - and as we ask machines to take on bigger roles in supporting clinician practice, society in general, and regulators in particular, need to ensure this is done in a safe and ethical manner. This is why Healthcare AI Automation of Clinical Decision Support is stratified into 2 levels, differentiated by complexity and level of regulation. Level 3 solutions meet the FDA’s criteria for Non-Device Clinical Decision Support (CDS) software, meaning that they do not require explicit FDA approval as Software as a Medical Device (SAMD). This means that the system:

1. Does NOT acquire, process or analyze medical images, signals or patterns.

2. Displays, analyzes or prints medical information normally communicated between Health Care Professionals (HCPs).

3. Provides recommendations (information/options) to an HCP rather than a specific output or directive.

4. Provides the basis of the recommendation so that the HCP does not rely primarily on any recommendation to make a decision.

Any system that does not meet ALL the above criteria requires FDA approval as SAMD, and is thus a Level 4 solution. There is substantial ambiguity in how to interpret these criteria - this is such a new area that the FDA itself isn’t sure how to think about these systems, and we can expect significant trial-and-error in the relationship between developers and regulators over the coming years. For those operating in the CE framework the details are different, but a similar stratification based on risk and complexity still applies.
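
The "all four or Level 4" rule above can be sketched as a simple check. This is only an illustration under loud assumptions: the `CdsProfile` fields and the `automation_level` function are hypothetical names invented here, and reducing each criterion to a yes/no answer glosses over exactly the ambiguity described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CdsProfile:
    """Hypothetical yes/no answers describing one decision-support tool."""
    analyzes_images_or_signals: bool      # criterion 1: must be False
    presents_medical_information: bool    # criterion 2: must be True
    offers_options_not_directives: bool   # criterion 3: must be True
    explains_its_basis: bool              # criterion 4: must be True


def automation_level(profile: CdsProfile) -> int:
    """Return 3 if all four criteria are met, otherwise 4."""
    meets_all_criteria = (
        not profile.analyzes_images_or_signals
        and profile.presents_medical_information
        and profile.offers_options_not_directives
        and profile.explains_its_basis
    )
    return 3 if meets_all_criteria else 4


# Illustrative profiles only; not assessments of any real product.
guideline_assistant = CdsProfile(False, True, True, True)
imaging_reader = CdsProfile(True, True, True, True)

print(automation_level(guideline_assistant))
print(automation_level(imaging_reader))
```

Failing any single criterion is enough to move a tool to Level 4, which is why the hypothetical imaging reader lands there even though it satisfies the other three.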

Limitations

Before delving into the actual applications, it is important to understand how medical AI is fundamentally limited by the data it’s trained on – most of the knowledge required for thoughtful clinical practice has never been articulated in a way that a computer model could understand. LLM developers publicize how well their models do on tests, like the US Medical Licensing Exam (USMLE), but that is a low bar. A human who has just aced their USMLE and started as an intern (the entry-level for post-graduate medical education) is not expected to make any major medical decision without a more trained and experienced Human in the Loop (usually an attending physician or senior resident).

Clinical medicine is deeply multi-modal, and a large part of the secret curriculum for young doctors is to learn to read patients using their eyes and hands. This human-human interface has been honed over millennia, with modern physicians benefitting from wisdom accumulated from Hippocrates to Galen to Avicenna. Even before the advent of the scientific method, germ theory, or modern anatomy, physicians had developed a way of seeing and understanding their patients. Medical residency is the original human deep learning – a generic LLM (the human brain after medical school) is trained on a very large, inconsistently labeled multimodal dataset of 10,000-20,000 hours over 3-7 years. At the end, an actual network of neurons has been trained to connect inputs to outputs in a manner that’s impossible to describe, but works incredibly well.

While physicians in training see a fraction of the number of patients an LLM could ingest, the data is infinitely richer, with high-definition audio, video, and olfactory signals, which a computer today could only see as a faint, sad shadow eventually documented in the EHR. An algorithm, despite its billions of parameters and trillions of tokens of training data, does not have access to anywhere near the full information required to understand any significant clinical situation, nor the training required to interpret it. Recent advances in multimodal LLMs are a step towards the technical capacity for this sort of “thinking,” but the requisite medical training data is not yet generated, much less available. This is, in principle, a solvable problem, but it would require generating a massive dataset of hundreds of thousands of hours of high-definition video of patients, linked with sensor data that replaces the “touch” component of physical diagnosis, and longitudinal medical data that includes outcomes. No one is building that dataset just yet (if any investors are interested in funding this, please reach out).

GenAI solutions, in the near term, can only address the areas of medical care where both the inputs and outputs are text or static-image based. The next articles will detail the more specific implications for the augmentation and automation of diagnosis, assessment, treatment and counselling, but the most important take-away is that no physicians need to worry about losing their jobs to AI anytime soon.